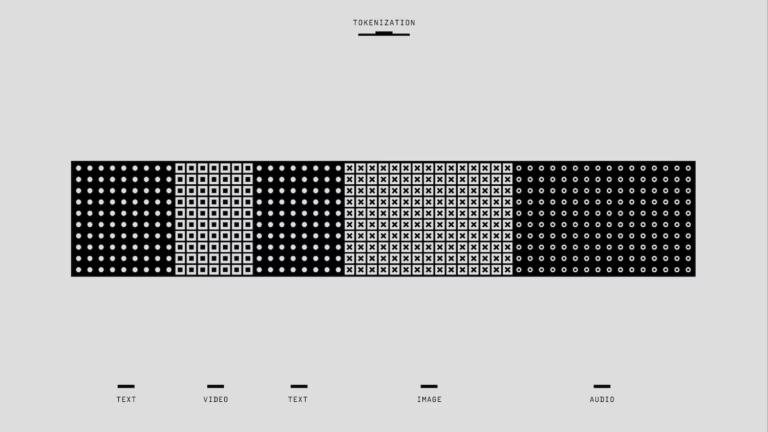

TokenOps: Optimizing Token Usage in LLM API Applications via Pre- and Post-Processing Layers

The adoption of Large Language Models (LLMs) such as GPT-4 and Claude 3 has introduced significant operational challenges, chiefly escalating cost, latency, and computational load caused by excessive token usage. Tokens are not merely units of computation; each one carries a direct economic and environmental cost. This paper presents TokenOps, a dual-layer optimization architecture designed to substantially reduce token usage through a pre-processing layer applied to prompts before each API call and a post-processing layer applied to the model's output. The framework was developed and empirically validated in collaboration with…
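The abstract does not specify which transformations TokenOps applies in each layer. The wrapper below is only a minimal sketch of the dual-layer idea. It assumes simple text-level rules: collapsing whitespace and dropping duplicate lines before the call, then stripping a filler opening and capping length after it. The function names (`pre_process`, `post_process`, `tokenops_call`), the word-count token estimate, and the `fake_llm` stub are hypothetical illustrations. They are not the framework's actual API, and the estimate is not a real tokenizer.

```python
import re
from typing import Callable


def estimate_tokens(text: str) -> int:
    """Crude token estimate: counts whitespace-separated words.
    Real subword tokenizers give different counts; this is illustrative only."""
    return len(text.split())


def pre_process(prompt: str) -> str:
    """Hypothetical pre-processing layer: shrink the prompt before it is sent."""
    # Collapse runs of whitespace into single spaces within each line.
    lines = [re.sub(r"\s+", " ", line).strip() for line in prompt.splitlines()]
    # Drop empty lines and exact duplicate lines, preserving first occurrences.
    seen = set()
    kept = []
    for line in lines:
        if line and line not in seen:
            seen.add(line)
            kept.append(line)
    return "\n".join(kept)


def post_process(response: str, max_words: int = 50) -> str:
    """Hypothetical post-processing layer: trim the model output before it is
    stored or fed back into a later prompt."""
    # Remove a common filler opening (an illustrative pattern, not exhaustive).
    response = re.sub(r"^(Sure|Certainly)[,!.]\s*", "", response.strip())
    # Cap the output at max_words whitespace-separated words.
    words = response.split()
    return " ".join(words[:max_words])


def tokenops_call(prompt: str, llm: Callable[[str], str], max_words: int = 50) -> dict:
    """Wrap an arbitrary model call with both layers and report prompt savings."""
    compact = pre_process(prompt)
    raw = llm(compact)
    final = post_process(raw, max_words)
    return {
        "prompt_tokens_before": estimate_tokens(prompt),
        "prompt_tokens_after": estimate_tokens(compact),
        "response": final,
    }


if __name__ == "__main__":
    # Stand-in for a real LLM API client (hypothetical).
    def fake_llm(p: str) -> str:
        return "Sure! " + p.upper()

    prompt = "Summarize   the report.\n\nSummarize   the report.\n  Be brief.  "
    print(tokenops_call(prompt, fake_llm))
```

In this toy run the duplicated instruction is removed before the call, cutting the estimated prompt size from 8 to 5 words, and the filler "Sure!" is stripped from the reply. Keeping both layers as pure functions around an injected `llm` callable means either layer can be swapped for a stronger technique without touching the API client.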